I recently spent some time looking at a number of iPhone apps in the App Store to see how well they were implementing SSL. It was a little surprising to see how many big-name apps ignored SSL errors and even more surprising to see some that didn’t use SSL at all. If you want the short version, head on over to iMore.com. Here I wanted to take some time to take a closer look at the issues that I found and how I found them in hopes that other developers can avoid making the same mistakes.

Charles Proxy is a tool I’ve discussed on here previously. It’s a proxy tool that allows you to intercept traffic from your device. One of the features Charles has is SSL proxying. iOS ships with a listed of trusted Certificate Authorities on every iPhone, iPad and iPod touch. These CAs are organizations, who are responsible for issuing SSL certificates, that Apple has deemed trustworthy. Modern browsers, as well as other software and hardware, ship with similar lists that tell them which SSL certificates can be trusted and which cannot. If an iPhone, for example, goes to make a secure connection to https://google.com, the device looks at the SSL certificate and, among other things, checks the CA that issued the certificate. In Google’s case, the device would see that the SSL certificate has been issued by Verisign, and since Verisign is a trusted CA, it will accept the SSL certificate for this communication.

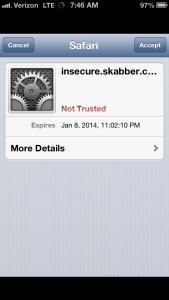

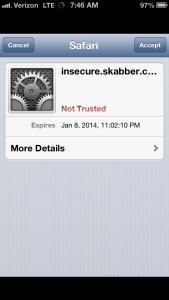

Conversely, if you were to try to connect to https://insecure.skabber.com in Safari on your  device, you would receive an alert about not being able to identify the server’s identity and asking if you want to continue anyway. This is because the certificate has been issued by the Ministry of Silly Walks, which is not a trusted CA. If an app attempts to make a connection and runs into an issue like this, that app should fail the connection. Since the identity of the server you’re connecting to cannot be trusted, the application should err on the side of caution and refuse to send any data, lest it turn over sensitive details to a malicious entity. To understand why the warnings should be heeded or what might cause them to appear, it may be helpful to give more background here.

device, you would receive an alert about not being able to identify the server’s identity and asking if you want to continue anyway. This is because the certificate has been issued by the Ministry of Silly Walks, which is not a trusted CA. If an app attempts to make a connection and runs into an issue like this, that app should fail the connection. Since the identity of the server you’re connecting to cannot be trusted, the application should err on the side of caution and refuse to send any data, lest it turn over sensitive details to a malicious entity. To understand why the warnings should be heeded or what might cause them to appear, it may be helpful to give more background here.

The reason encryption works is because a person trying to eavesdrop doesn’t have the keys to decrypt the data. But what if they did? In a man-in-the-middle attack, a malicious third party sits between you and the server, decrypting your traffic. The attacker pretends to be the server to you, and pretends to be you to the server. When you send your encrypted data to what you believe is the server, it’s actually the attacker who now has the keys to decrypt the data, because you’re the one they began the connection with. After decrypting the data, looking at it, and manipulating it if they want, the attacker then re-encrypts the data and sends it across their secured connection to the actual server. When the server responds, the attacker will be able to do the same, decrypting the data, looking at it, then re-encrypting it before sending it along to you. But in order to do this, the attacker would need to possess an SSL certificate for the server you’re trying to connect to.

As it turns out, anybody can create their own certificate authority. As such, anybody can issue an SSL certificate. Furthermore, that SSL certificate can be for any server that they want. You could go generate an SSL certificate for google.com right now if you wanted. But what would prevent you from ever being able to make use of that certificate, like in the scenario described above, is that it would not be issued by a trusted CA. You could say you were google.com, but nobody would believe you because your certificate didn’t come from a trusted source. SSL hinges on this chain of trust. And this is where some apps fail.

Instead of only accepting SSL certificates from iOS’ trusted list of CAs, some apps accept SSL certificates issued by anybody. Because of this, the man-in-the-middle attack described above is possible against traffic sent by these apps. An attacker can use an SSL proxying tool that will generate SSL certificates on-the-fly for whatever server is trying to be connected to. Since the apps trust any CA, they will allow these certificates and open up communication with the attacker, who can then view the traffic and send it on to the server. Credit Karma, Fandango, Cinemagram, Flickr, eFax, WebEx, TD Ameritrade, E*TRADE, Monster, H&R Block, ooVoo, Match.com, PAYware (by Verifone), EVERPay Mobile POS, and Learn Vest all suffer from this issue. Some companies have already responded. E*TRADE already has a partial fix available, and a more complete fix on the way. Credit Karma, TD Ameritrade say they have updates in the works. Cisco has opened tickets in their bug tracker (Cisco.com login required) and CVE for users to track the issue, and are also working on a fix. Monster responded but has not been able to say whether or not they will fix it. eFax’s stance is that once your data is outside of their network, they cannot protect it. None of the others have responded. Users should be particularly careful with buying tickets through Fandango since credit card details are transmitted.

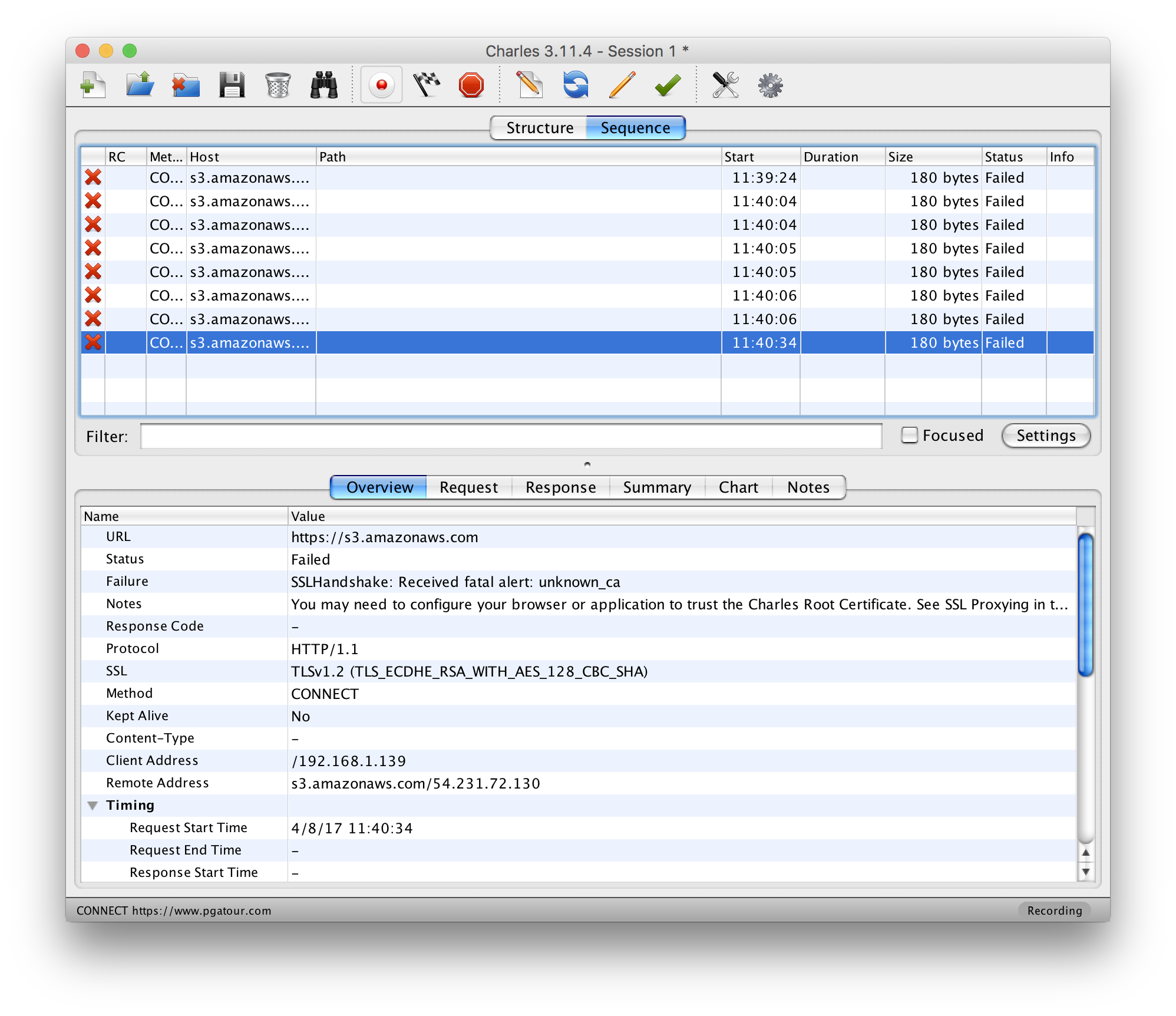

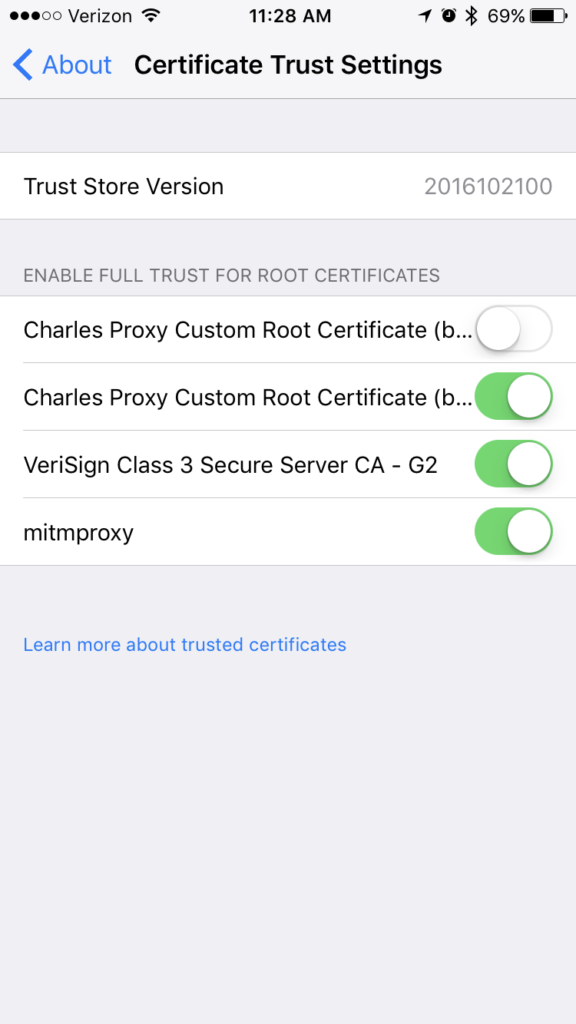

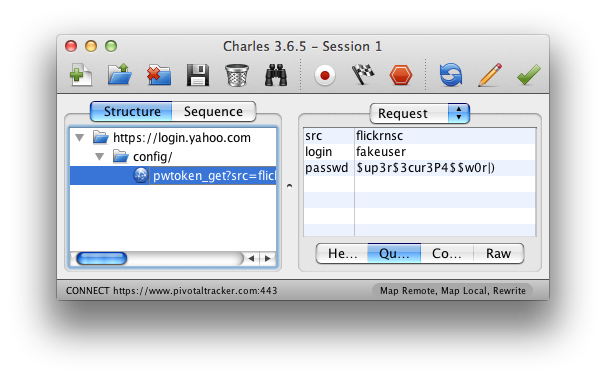

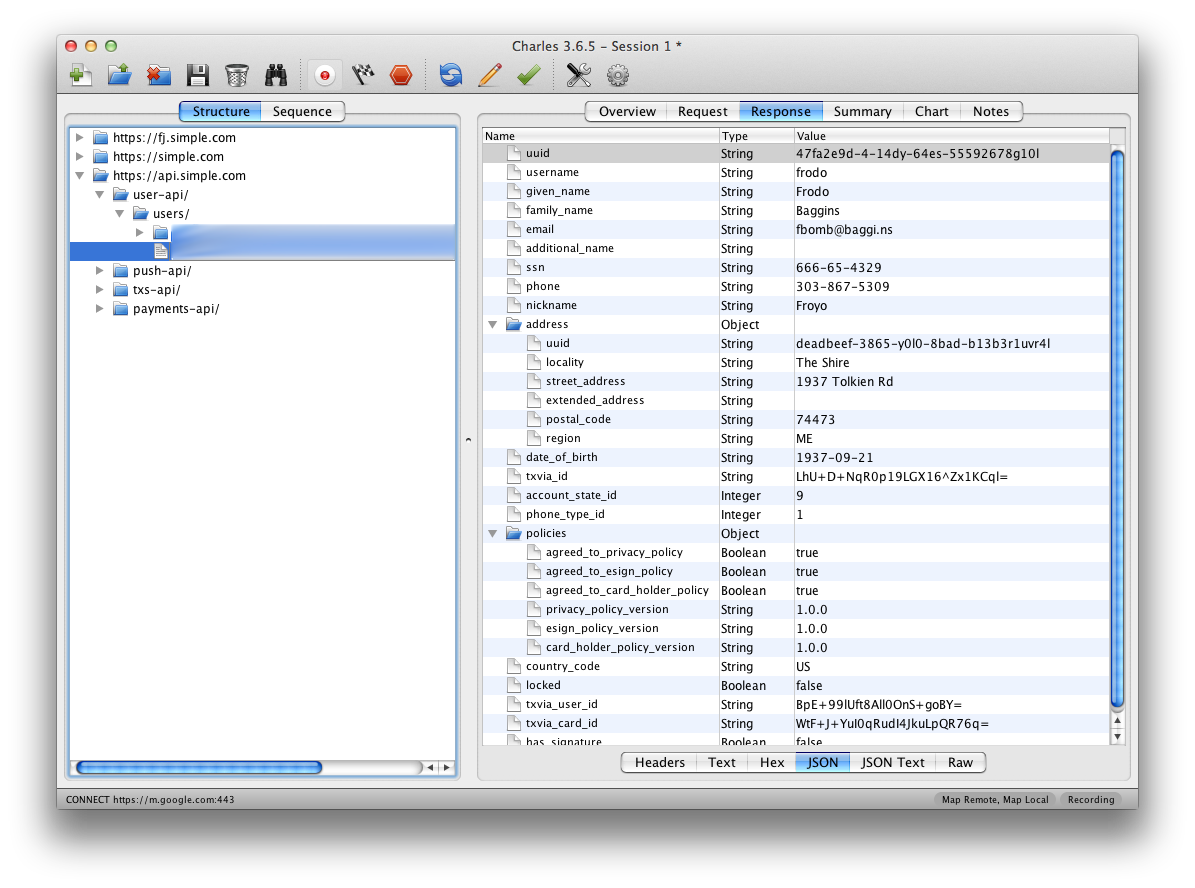

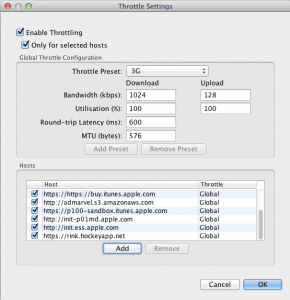

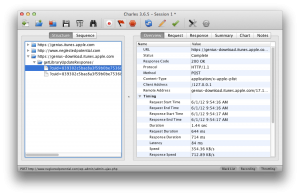

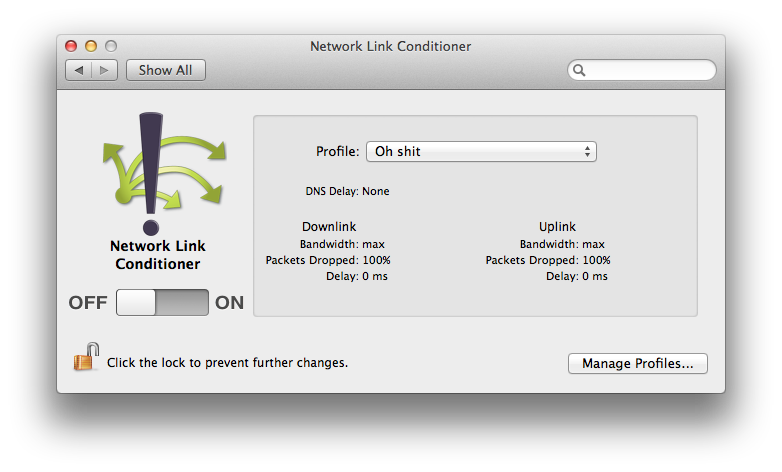

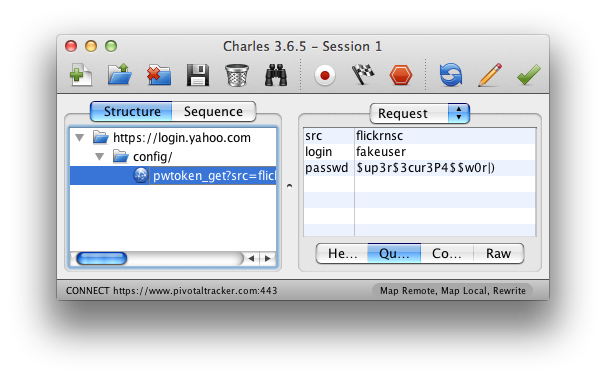

These apps are easy enough to find on your own by using a tool like Charles Proxy or mitmproxy. Normally for these tools to work for testing, you would install a certificate on your device to tell the device to trust your CA. Simply skip that step (or uninstall the certificate if you already have it Settings > General > Profiles) and fire up your proxy with SSL proxying enabled. With traffic going through your untrusted proxy, you can fire up apps like Facebook and see that they won’t work. Looking at the requests in your proxy, you’ll see that a connection was attempted, but then closed and no traffic was sent. Now, if you go and open an app that ignores warnings about untrusted CAs, like Flickr, and try to log in, you’ll see something different. You’ll see the app successfully log in and in Charles, you’ll see the request that was sent to the server, and inside it, your username and password. If an attacker were performing a man-in-the-middle attack on you, this is what they would be seeing.

While searching for apps with this problem, I stumbled upon a few that while not suffering from this, had other security problems related to logins. Flixster, iSlick, Camera Awesome!, TechCrunch and Photobucket all send some login information over HTTP. In the case of Camera Awesome!, it’s only Photobucket credentials, and in TechCrunch it’s only authentication for Pocket. But iSlick, Flixster and Photobucket all send usernames and passwords for their respective accounts in plaintext. This means that a user wouldn’t have to do any SSL certificate generation or proxying, they need only be on the same network as you and sniff the network traffic. Obviously this poses an even larger security risk than just accepting self-signed SSL certificate. Particularly if users use the same usernames and/or passwords for other accounts. In Photobucket’s case, they hash your password with MD5, but the password can easily be retrieved using a reverse MD5 hash lookup tool.

So what should be done? Well in the case of apps not using encryption, they should be using SSL for those login requests. iSlick says that SSL support will be coming in a future update. I haven’t heard any updates from the others. As for the other apps that are using SSL, but not implementing it correctly, they need to make sure that they respond to SSL warnings properly. From what I’ve seen, most networking libraries refuse self-signed SSL certs by default. Perhaps ignoring these warnings was done for development purposes and developers forgot to later pull that code out. Whatever the case, I hope each of the companies will respond quickly with updates for their users and hopefully we can all learn from their mistakes to be a little more cautious about how we implement security in our apps. If you decide to do some research on some of the apps you use, be sure to let developers know if you find a problem so they can fix it.