Effective problem solving requires that you fully understand the problem you’re trying to address. This holds true in life and in programming. Effective testing requires that you have a good understanding of what you are testing and why. Without this solid foundation, at a minimum you’ll cause some confusion, and often times you’ll end up wasting time, money and energy investigating problems that aren’t really problems. This week, a company called OptoFidelity provided the perfect opportunity to discuss this challenge that engineers and testers commonly face.

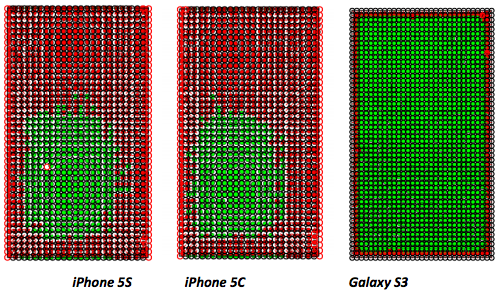

OptoFidelity is a technology company that, among other things, provides automated test solutions. They recently performed a number of automated tests on the iPhone 5c, iPhone 5s, and Samsung Galaxy S3. One of the tests that was carried out is meant to measure the accuracy of a touch panel. This test is performed by a robot which has an artificial finger that performs hundreds of precise taps across the entire display. The location of the tap is compared against where the device registered the tap. If the actual location and registered location are within 1mm of each other, the tap is displayed as a green dot– a pass. If the actual location and registered location differ by 1mm or more, then tap is displayed as a red dot– a failure.

The image above comes from OptoFidelity and shows the results of the test. As you can see, the Galaxy S3 performs very well in this test, only losing accuracy at the very edge of the display. The iPhones show a somewhat alarming amount of inaccuracy, with roughly 75% of the touchscreen yielding inaccurate results. The obvious conclusion to draw here is that the iPhone 5c and 5s clearly have subpar touchscreen accuracy, at least when compared to the Samsung Galaxy S3. But something sticks out about the iPhone results.

The green area for the iPhone results, where it registered taps within 1mm of the actual tap location, fall into an area that would be easily tappable with your thumb when holding your phone with your right hand. If you pick up your phone with your right hand, and try tapping with your right thumb, it’s easy to see that this area is easy to tap with a fair amount of accuracy. You’re not stretching your thumb as you would when you go for the top of the screen, or scrunching your thumb up too much like you would when trying to tap close to the right edge of the screen. You also wind up tapping with the same part of your thumb while in this area. Put more concisely, this is an area of the screen that you’re more likely to tap exactly where you mean to. So what about where the circles turn red? What’s going on there?

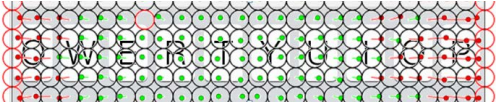

Let’s look at one specific red area that OptoFidelity calls out in their study– the left and right edges of the keyboard.

In this image, you can see the results of the touchscreen accuracy test overlaid on the top row of the iOS keyboard. In the center, over letters like T and Y, the black circles of the robot’s tap show green dots nearly centered inside, indicating that the iPhone registered the taps very close to the center of where the tap actually took place. As you move left or right of the center, you see the dots start to shift in the same direction. As you move over to the letters E and W on the left, you see the green dot moving to the left side of the actual tap circle, and by the time you get to Q, the iPhone is now registering taps 1mm or more to the left of where the actual tap took place. The conclusion of the test indicates this is a failure in accuracy on the part of the touchscreen, but is this a failure or a feature?

Looking at the displacement of taps as you move away from the green area, there’s a definite pattern. The more you move away from the easily-tappable area, the greater the “inaccuracy” of the tap. But the inaccuracy skews in a way that would make the target slightly closer to starting position of your thumb (which is likely the most frequently used digit for tapping). As your thumb stretches out from your hand, likely positioned near the bottom of the phone, the portion of your thumb that actually comes into contact with the screen when you tap changes. Your perception of the screen also changes slightly, as when you move higher on the screen, it’s less likely that you’re viewing the screen at exactly a 90 degree angle. These are factors that this automated test does not account for. The robot doing the test is viewing its tap target at a perpendicular angle to the screen. It is also tapping at a perpendicular angle every time. This isn’t generally how people interact with their phones.

I haven’t been able to find official documentation on this, but I think this behavior is intentional compensation being done by Apple. Have you ever tried tapping on an iPad or iPhone while it’s upside-down to you, like when you’re showing something to a friend and you try tapping while they’re holding the device? It seems nearly impossible. The device never cooperates. If the iPhone is compensating for taps based on assumptions about how it is being held and interacted with, this would make total sense. If you tap on a device while it’s upside-down, not only would you not receive the benefit of the compensation, but it would be working against you. Tapping on the device, the iPhone would assume you meant to tap higher, when in reality, you’re upside down and likely already tapping higher than you mean to, resulting in you completely missing what you’re trying to tap.

Commentators across the Internet have already chimed in saying “I’ve noticed this too! I’m always tapping the wrong button!” It’s a touchscreen– you’re going to miss. If the report had revealed the opposite, that the Galaxy S3 was inaccurate, you would have had a swarm of S3 users also supporting the study, citing that they sometimes tap the wrong button or key. The bottom line is, the testing performed here bears no resemblance to real-world usage. OptoFidelity tested how closely each device maps a tap to the actual position of the tap. This accuracy would be extremely important if you had robot fingers tapping very small and close tap targets at a 90 degree angle. If you’re looking for a phone to use this way, steer clear of the iPhone. What the test didn’t show is the accuracy of taps on a device relative to a user’s intended tap target. I would not be surprised if this was exactly the sort of testing Apple did when they decided to skew the touch accuracy of their devices.

This comes up in testing all of the time. In order to properly test something, you need to understand what it is that you’re testing. If you don’t understand what you’re testing, then it’s easy to misinterpret the results. Every tester out there has filed a bug, only to have it explained to them why it’s actually the expected behavior for an app (and not in the joking “it’s not a bug, it’s a feature” kind of way). A critical part of our jobs as testers is not just reporting what something does, but asking why it behaves that way. Consider this real world example. You come across a light switch that when flipped down, the lights are on, and when flipped up, the lights are off. It could be that the switch was installed upside-down. Or it could be that it’s a three-way switch and there’s another switch elsewhere that controls the same lights. In the latter case, the behavior of the switch could not be considered a bug. Arriving at that conclusion requires an understanding of what you’re testing in order to know the expected result.

I could be completely wrong about the accuracy of the iPhone. I am not a touchscreen expert, and have no proof to show what’s going on. I am in no better position than OptoFidelity to make claims about the accuracy of the iPhone touchscreen. My point is that they should be asking questions. Testers should always ask questions. Testers and engineers should always ask questions. By asking questions and trying to look below the surface, you gain a better understanding of the problems you’re trying to solve and the original questions you were trying to answer. As developers and testers, asking questions is how we build better products and yield the best results.